Abstract

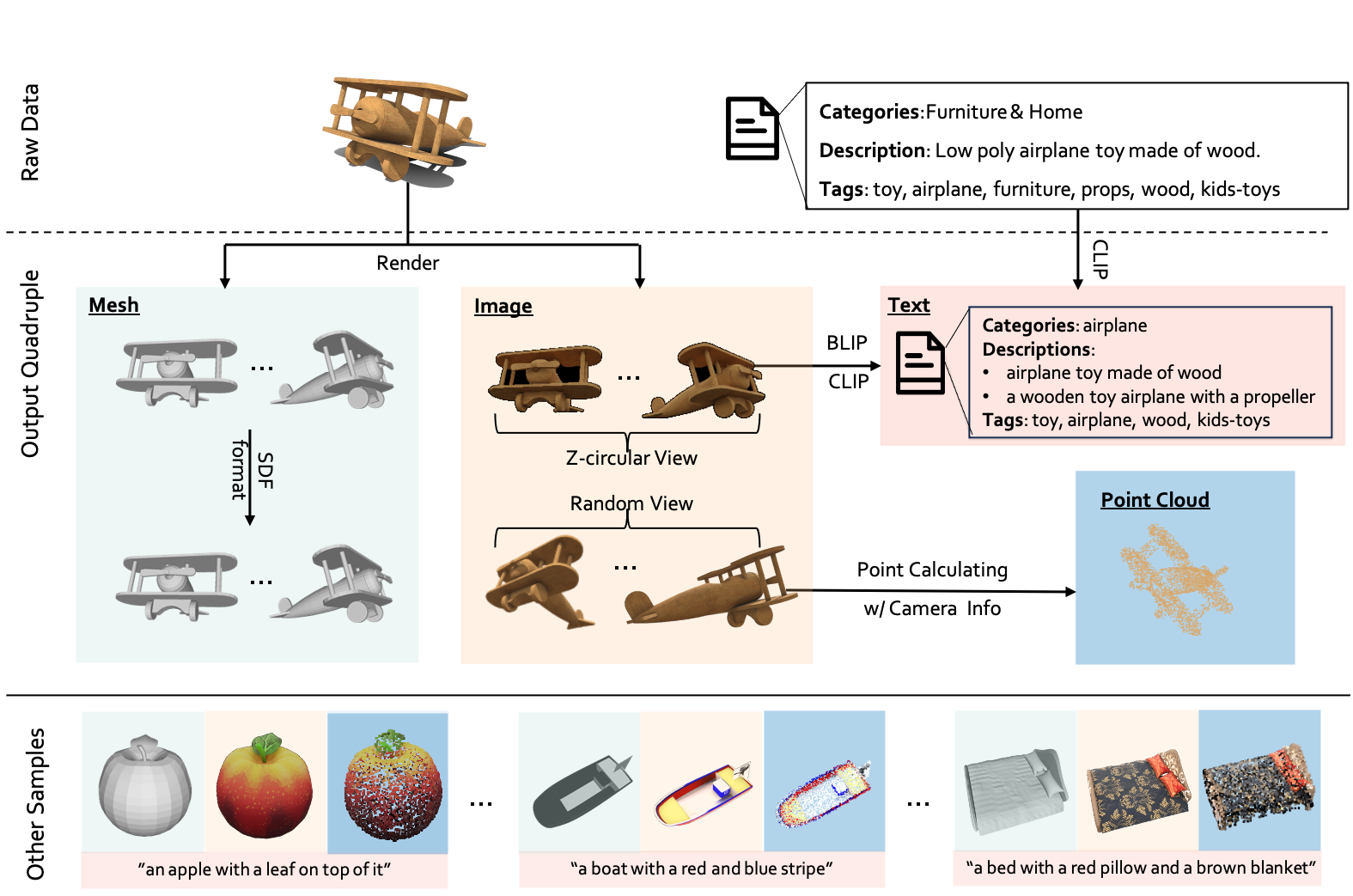

We propose UniG3D, a unified 3D object generation dataset constructed by applying a universal data transformation pipeline to the Objaverse and ShapeNet datasets. This pipeline converts each raw 3D model into a comprehensive multi-modal data representation (mesh, point cloud, image, and text).

UniG3D offers three contributions:

1) Comprehensive Multi-Modal Data: We construct a large-scale unified 3D object generation dataset with rich textual information.

2) Universal Data Transformation Pipeline: We propose a universal data transformation pipeline that can convert any 3D data into representations suitable for most 3D object generation methods.

3) Valuable Insights: To validate the efficacy of our dataset, we conduct experiments under various input conditions and target 3D representations. Based on these empirical investigations, we present several insights into the impact of different input conditions, the efficacy of data expansion, and the significance of text quality.

| Dataset | #Mesh | #Point Cloud | #Image | #Text |
|---|---|---|---|---|
| UniG3D-ShapeNet | 500K | 50K | 1M | 50K |
| UniG3D-Objaverse | 5M | 500K | 10M | 500K |

Table 1. Statistics of the four representations (mesh, point cloud, image, and text) in the two UniG3D datasets.

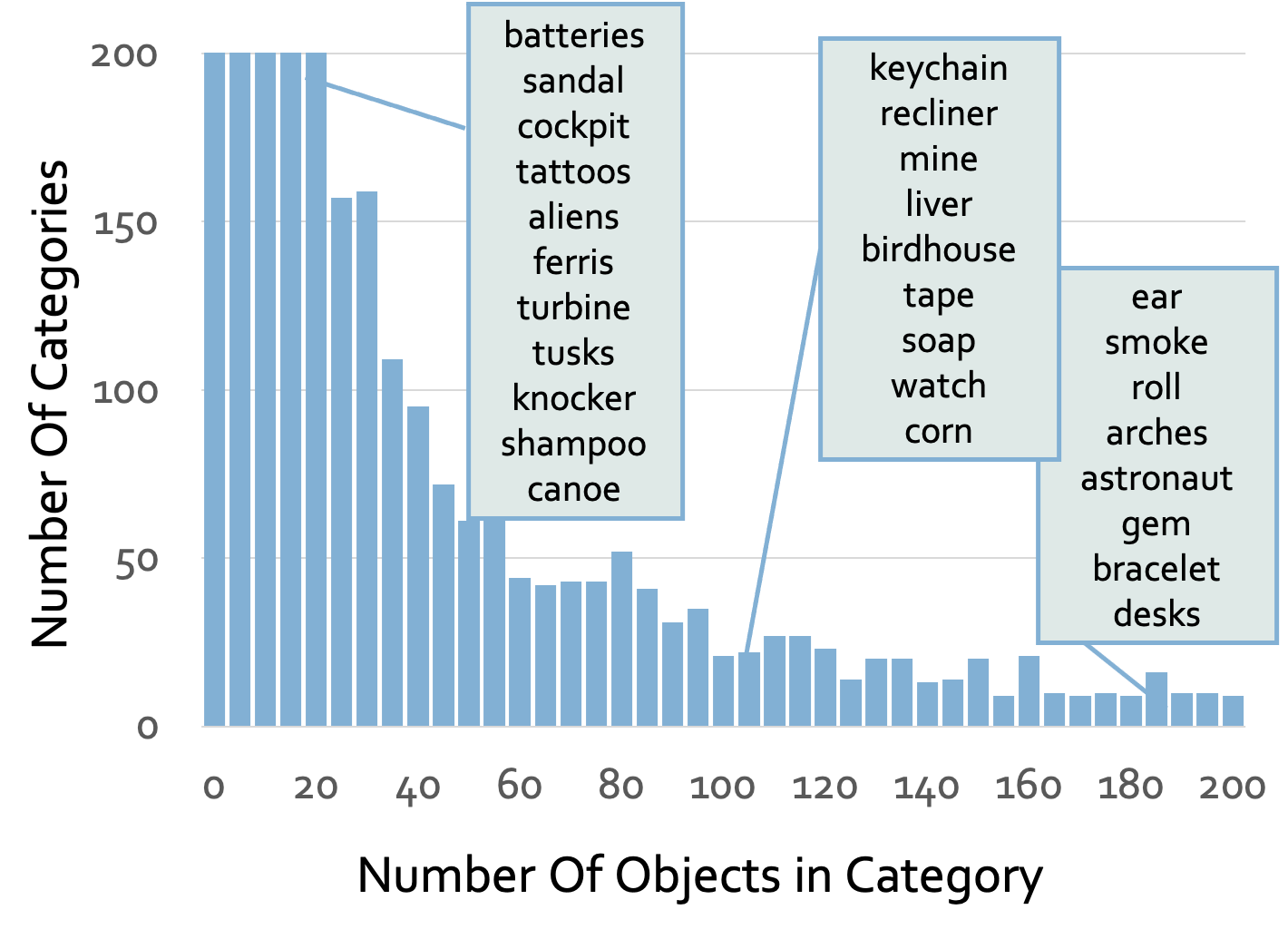

Figure 1. A histogram of fine-grained UniG3D categories, with representative members from several bins highlighted.

Figure 2. (a) Word cloud of text information. (b) Examples of multi-modal data representation in UniG3D.

Because the full dataset requires a large amount of storage, we have not yet found a suitable way to publicly release the parts of each quadruple other than the text. Instead, we provide a detailed data transformation pipeline with which users can easily obtain data consistent with our dataset. Please refer to the script described in readme.md for details.
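The actual pipeline script is the one referenced in readme.md and is not reproduced here. As a hedged illustration of one step that a mesh-to-point-cloud conversion typically involves, the sketch below samples points uniformly over a triangle mesh's surface (faces chosen with probability proportional to their area) and then normalizes the cloud to fit in [-1, 1]. The function names `triangle_area`, `sample_point_cloud`, and `normalize` are hypothetical, and we do not claim UniG3D uses this exact sampler or normalization convention.

```python
import math
import random

# Hypothetical helpers, not taken from the UniG3D pipeline.
# A mesh is a list of (x, y, z) vertices plus triangles given as index triples.

def triangle_area(a, b, c):
    # Half the norm of the cross product of two edge vectors.
    ux, uy, uz = b[0] - a[0], b[1] - a[1], b[2] - a[2]
    vx, vy, vz = c[0] - a[0], c[1] - a[1], c[2] - a[2]
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)

def sample_point_cloud(vertices, faces, n_points, seed=0):
    rng = random.Random(seed)
    tris = [(vertices[i], vertices[j], vertices[k]) for i, j, k in faces]
    areas = [triangle_area(*t) for t in tris]
    # Area-weighted face choice keeps the point density uniform over the surface.
    chosen = rng.choices(tris, weights=areas, k=n_points)
    points = []
    for a, b, c in chosen:
        s, r2 = math.sqrt(rng.random()), rng.random()
        # Barycentric weights (1 - s, s(1 - r2), s*r2) sample the triangle uniformly.
        w0, w1, w2 = 1.0 - s, s * (1.0 - r2), s * r2
        points.append(tuple(w0 * a[d] + w1 * b[d] + w2 * c[d] for d in range(3)))
    return points

def normalize(points):
    # Center on the bounding-box center and scale the longest side to [-1, 1].
    lo = [min(p[d] for p in points) for d in range(3)]
    hi = [max(p[d] for p in points) for d in range(3)]
    center = [(l + h) / 2 for l, h in zip(lo, hi)]
    scale = max(h - l for l, h in zip(lo, hi)) / 2 or 1.0
    return [tuple((p[d] - center[d]) / scale for d in range(3)) for p in points]

# Usage: a 2 x 2 square in the z = 0 plane, built from two triangles.
verts = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 2.0, 0.0), (0.0, 2.0, 0.0)]
faces = [(0, 1, 2), (0, 2, 3)]
cloud = normalize(sample_point_cloud(verts, faces, 1024))
```

Area weighting matters because picking faces uniformly would oversample regions tessellated with many small triangles.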

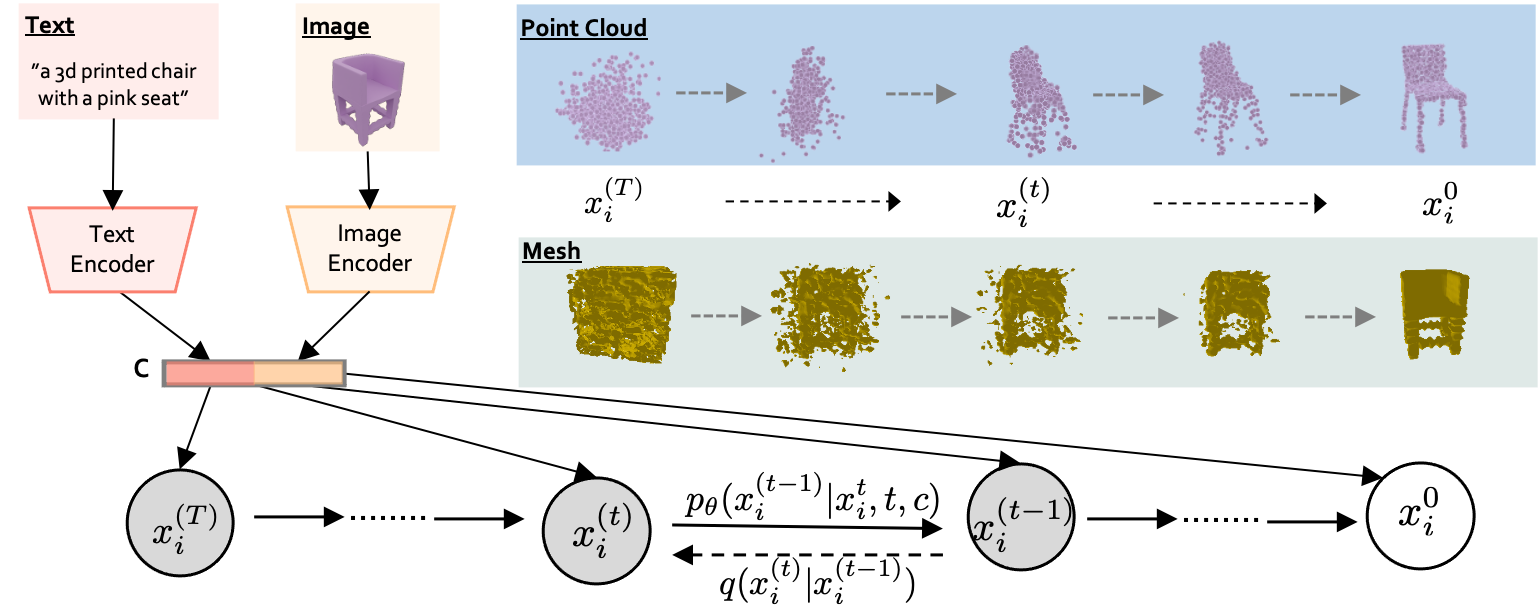

Figure 3. Directed graphical model of the generation process for 3D representations.

We implement two widely recognized 3D object generation methods, each tailored to one of the two prevalent 3D representations, point clouds and signed distance functions (SDFs):

1) Point-E method for point cloud generation: Because the official codebase does not provide training code, we developed and released our own training code at: unig3d_point-e.

2) SDFusion method for signed distance function generation: We conduct our experiments using the official codebase.
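To make the SDF target concrete, here is a minimal sketch of a truncated signed distance grid: negative inside the shape, zero on the surface, positive outside, with values clamped to a small band around the surface. It assumes an analytic sphere in place of a real mesh (computing the SDF of an arbitrary mesh needs a separate tool, which this sketch does not attempt). The names `sphere_sdf` and `sdf_grid`, the 16³ resolution, and the 0.2 truncation value are all illustrative choices, not SDFusion's settings or API.

```python
import math

def sphere_sdf(x, y, z, radius=0.5):
    # Signed distance to a sphere centred at the origin:
    # negative inside, zero on the surface, positive outside.
    return math.sqrt(x * x + y * y + z * z) - radius

def sdf_grid(sdf, resolution=16, truncation=0.2, bound=1.0):
    # Sample the SDF at voxel centres of the cube [-bound, bound]^3 and clamp
    # each value to [-truncation, truncation] (a truncated SDF).
    step = 2.0 * bound / resolution
    coords = [-bound + (i + 0.5) * step for i in range(resolution)]
    return [[[max(-truncation, min(truncation, sdf(x, y, z)))
              for z in coords] for y in coords] for x in coords]

grid = sdf_grid(sphere_sdf)
# Count voxels whose centre lies inside the sphere.
inside = sum(v < 0 for plane in grid for row in plane for v in row)
```

Truncation discards distance information far from the surface, which keeps the grid's values in a fixed range.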